Visiting Ritsumeikan University for a study on personal and national cybersecurity

Shunichi Ishihara, CHL/Speech and Language Lab, visited Ritsumeikan University for two months under the RU-ANU Research Fellowship Scheme. Under the topic of 'Threats to Personal and National Cybersecurity: Misuse of Generative AI in Identity Deception in Japanese,' he investigated the ability of generative AI to mimic the authorial characteristics of individuals in Japanese. This study is a spin-off from the four-year project 'Development of a Japanese Authorship Attribution System for Digital Forensics (JSPS (C): 23K11107).'

Generative artificial intelligence (AI) has brought significant benefits to society, improving efficiency and convenience. However, it can also be exploited for malicious purposes. In areas such as social media, cybercrime, forensic science, and cybersecurity, 'deepfake' technologies have raised concerns as potential threats to personal and national security. Deepfake content is often indistinguishable from authentic material and has been used in harmful activities such as revenge porn, fake news, and financial fraud.

Natural language generation (NLG) technology, a prominent application of generative AI, can produce text that is difficult to distinguish from text written by humans. While impressive, this capability can also be misused. Concerns have emerged that NLG systems could facilitate large-scale social engineering attacks, including identity deception and the creation of persuasive phishing emails or fake news.

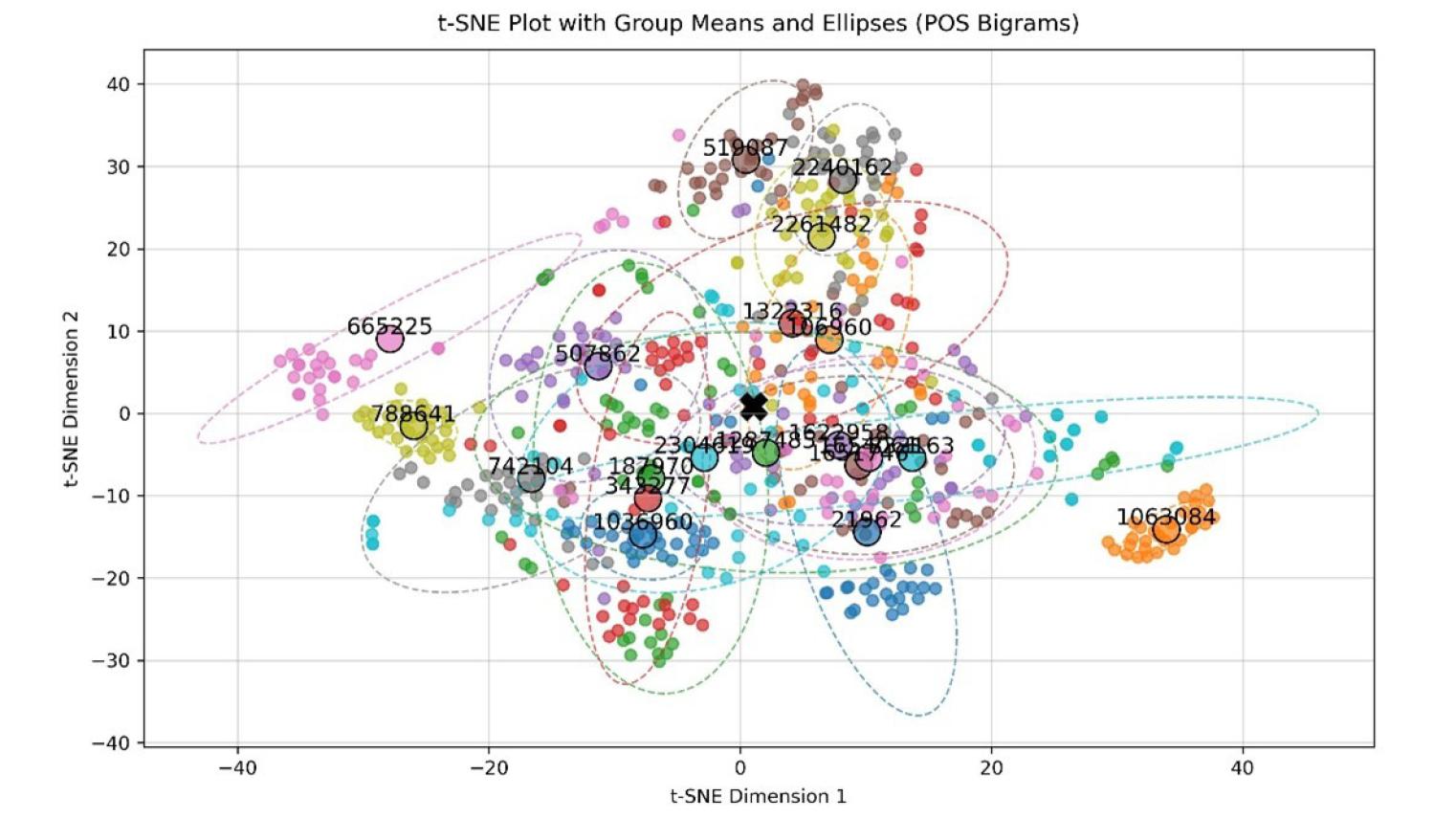

This study explores generative AI’s ability to mimic individual writing styles using ChatGPT, a representative NLG system. Forensic text comparison experiments compared human-written texts with texts that ChatGPT generated after being trained to imitate specific authors' writing styles in Japanese. Key findings include:

- ChatGPT can mimic writing styles in some cases, as shown by numerical comparisons.

- Some mimicking is inaccurate, as ChatGPT occasionally copies content directly from its training data.

- In many instances, even untrained human readers can easily distinguish machine-generated texts from human-written ones.

- Overall, ChatGPT's ability to impersonate individual writing styles is limited.

During the visit, several research networks were successfully built or strengthened.